4.2.1. Low budget

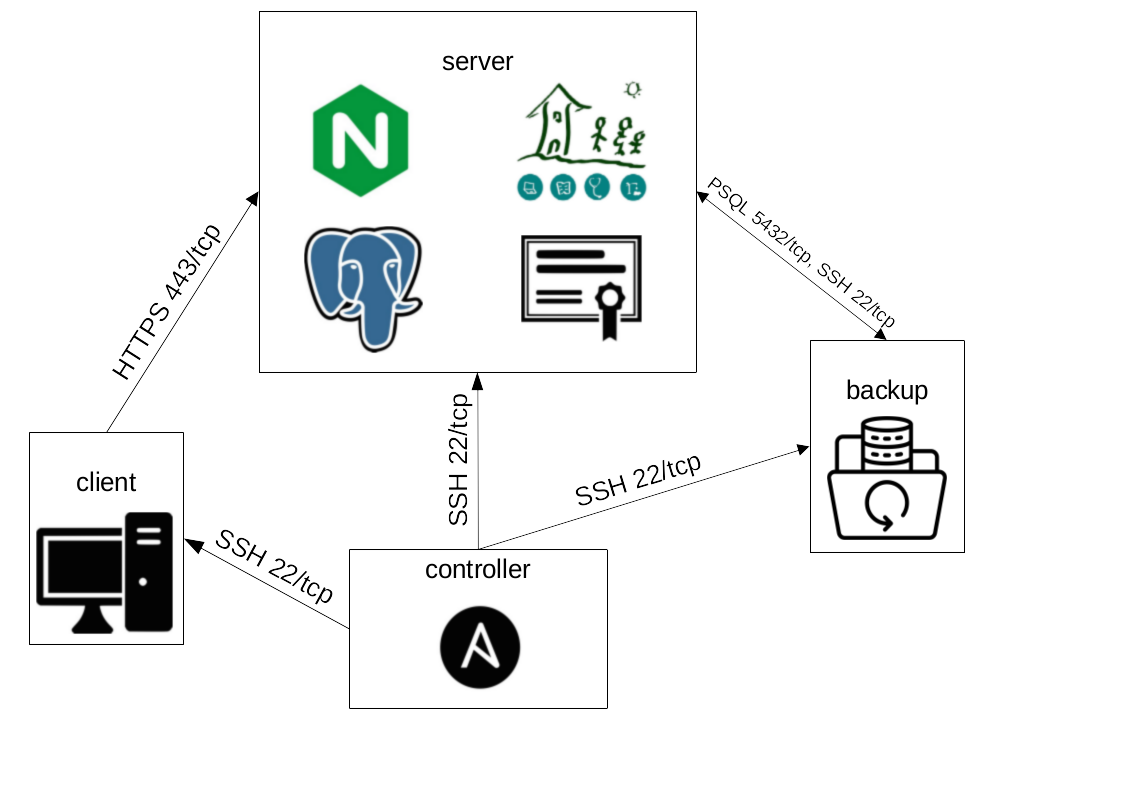

Some implementations have very limited resources, both in hardware and in system administrators. This example is therefore meant as a base for a GNU Health server with everything on one system. Nevertheless, the backup server and the client(s) are separate machines. Together with the Ansible controller, the desired system looks like this:

Overview of the system to be created. The directed arrows represent connection requests that still expect responses.

In this example we are using the following host names associated with the following IP addresses:

server.incus: 10.5.242.110

backup.incus: 10.5.242.111

controller.incus: 10.5.242.112

client.incus: 10.5.242.113

Replace them with your own host names and IP addresses. These machines are expected to be in place and accessible via SSH.
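If the host names cannot be resolved through DNS on the controller, one option is to add them to /etc/hosts. The following is a minimal sketch under that assumption. The helper name hosts_entries is hypothetical, and the addresses are the example values from above.

```shell
#!/bin/sh
# Print /etc/hosts-style lines for the four example machines.
# Hypothetical helper: replace names and addresses with your own.
hosts_entries() {
    cat <<'EOF'
10.5.242.110 server.incus
10.5.242.111 backup.incus
10.5.242.112 controller.incus
10.5.242.113 client.incus
EOF
}

# Review the output first. Append it only if the names do not resolve yet:
#   hosts_entries | sudo tee -a /etc/hosts
hosts_entries
```

Printing the lines instead of writing them directly keeps the step reviewable before anything on the system changes.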

Complex scenarios like this example are only developed and tested on Debian, as we see in it the best balance between a robust system with a big community on the one hand and a philosophy that follows the principles of free software to the greatest extent on the other.

For further information about backups read the chapters Backup & Restore inside Security & System Administration and the corresponding Roles barman and restic.

4.2.1.1. Requirements

In order to follow this tutorial you need the following prerequisites:

4 machines as stated above (target SSH & sudo user assumed to have the same name across the systems)

An email address used for sending emails from the server. It is highly recommended not to use it in any other context, e.g. because the password will be stored on the server. This solution is easier to set up than a mail server but has a drawback: check whether the information in your log files is too sensitive for your email provider. This address will be called source@example.com and you have to replace it with yours.

Any email address to receive emails from the server. This one can also be an address that is actually in use. It will be called target@example.com and you have to replace it with yours.

First of all, log in to your controller to install the requirements, then clone and enter this repository.

For this we create a directory outside the user's home folder for the Python virtual environment and the Git repository, owned by a newly created group ansible.

$ sudo apt update && sudo apt install -y sshpass python3-venv python3-pip git

$ sudo groupadd ansible

$ sudo usermod -aG ansible $USER

$ sudo mkdir /opt/ansible

$ sudo chown -R $USER:ansible /opt/ansible

$ sudo chmod 0775 /opt/ansible

$ newgrp ansible

$ groups

$ cd /opt/ansible

$ python3 -m venv venv

$ source venv/bin/activate

$ pip install ansible-core

$ ansible-galaxy collection install community.crypto community.general community.postgresql ansible.posix

$ git clone https://codeberg.org/gnuhealth/ansible.git gnuhealth-ansible

$ cd gnuhealth-ansible
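Before continuing, it can help to confirm that the tools installed above are actually on the PATH, especially after opening a new shell in which the virtual environment is not yet activated. The following is a minimal sketch. The helper name missing_cmds is hypothetical.

```shell
#!/bin/sh
# Report which of the given commands are missing from PATH.
# Returns 0 if all are present and 1 otherwise. Hypothetical helper.
missing_cmds() {
    rc=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd"
            rc=1
        fi
    done
    return "$rc"
}

# Tools installed in the steps above (apt packages and ansible-core).
missing_cmds git sshpass ansible ansible-playbook ansible-galaxy ansible-vault \
    || echo "Install the missing tools or run: source /opt/ansible/venv/bin/activate"
```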

4.2.1.2. Verify & Configure SSH

First we execute the SSH playbook in order to create a local SSH key pair and copy the public key into the desired directory:

$ ansible-playbook playbooks/ssh.yml -i inventories/dev -e inventory_hostname='controller.incus' -c local -e ssh_key_create=1 -e ssh_key_user=$USER -e ssh_key_fetch=1 -K

From now on we work on a copy of the default inventory so that we can modify it:

$ cp -r inventories/dev/* inventories/prod/

To start, we modify the hosts file such that it looks like this:

[gnuhealth]

[gnuhealth:children]

gnuhealth_app

gnuhealth_psql

gnuhealth_nginx

gnuhealth_ownca

gnuhealth_bak

[gnuhealth_app]

server.incus ansible_port=22

[gnuhealth_psql]

server.incus ansible_port=22

[gnuhealth_nginx]

server.incus ansible_port=22

[gnuhealth_ownca]

server.incus ansible_port=22

[gnuhealth_bak]

backup.incus ansible_port=22

[desktop]

client.incus ansible_port=22

[application:children]

gnuhealth_app

[postgresql:children]

gnuhealth_psql

[nginx:children]

gnuhealth_nginx

[ownca:children]

gnuhealth_ownca

[bak:children]

gnuhealth_bak

[ssh]

[ssh:children]

gnuhealth

desktop
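A quick sanity check of the edited file can catch a host that ended up under the wrong group. The following is a dependency-free sketch. The helper name group_hosts is hypothetical, and the path inventories/prod/hosts is an assumption about where the hosts file lives. It only reads the lines directly below a section header and does not resolve :children groups.

```shell
#!/bin/sh
# List the host names directly below an INI section header such as [gnuhealth_bak].
# Hypothetical helper: it does not follow ":children" groups.
group_hosts() {
    file=$1
    group=$2
    awk -v g="[$group]" '
        /^\[/          { in_group = ($0 == g); next }  # a new section starts
        in_group && NF { print $1 }                    # first field is the host name
    ' "$file"
}

# Assumed location of the hosts file edited above.
if [ -f inventories/prod/hosts ]; then
    group_hosts inventories/prod/hosts gnuhealth_bak
fi
```

With Ansible available, `ansible-inventory -i inventories/prod --graph` prints the complete group tree including child groups, which is the more thorough check.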

Next we get the servers' host keys and verify that we can connect to them:

$ ssh-keyscan -H {client.incus,server.incus,backup.incus} | sudo tee -a /etc/ssh/ssh_known_hosts

$ ansible -i inventories/prod ssh -m ping -k

This is trust on first use (TOFU), so we do not manually verify the fingerprints. If desired, obtain them during installation or in any other way outside SSH and compare them. Then edit the following values in inventories/prod/group_vars/ssh/vars.yml like this:

ssh_username: debian

[...]

ssh_key_trust_local_paths:

- key: ../fetch/controller.incus.pub

  user: debian

[...]

ssh_disable_pw_auth: true

For ssh_username & user you have to set the username that was chosen during the installation. debian is a placeholder that you will have to replace throughout this example. Note that the path ../fetch/controller.incus.pub also depends on the host name of the controller.

Disabling password authentication is a standard security measure, but it can turn the SSH key into a single point of failure. Make sure to have an alternative, whether it is another SSH key, the host that spawned the machine you want to log in to, or a display and a keyboard that you could attach to the physical server.

Now we call the SSH playbook on the servers and the client in order to trust the controller's SSH key and disable password-based authentication:

$ ansible-playbook playbooks/ssh.yml -i inventories/prod -K -k

The flags -k and -K lead to prompts for the SSH password and the sudo password respectively, which are probably the same.

As we enforced key-based SSH authentication, we make sure that we can still connect:

$ ansible -i inventories/prod ssh -m ping

If SSH works well, we overwrite the config file with the default one again because the SSH role is also called from other roles:

$ cp inventories/dev/group_vars/ssh/vars.yml inventories/prod/group_vars/ssh/vars.yml

4.2.1.3. Prepare inventory

The next step is to encrypt and edit the vault.yml for GNU Health, as it will contain passwords that are used or set later on. First generate a password for the vault and then run these commands:

$ ansible-vault encrypt inventories/prod/group_vars/gnuhealth/vault.yml

$ ansible-vault edit inventories/prod/group_vars/gnuhealth/vault.yml

In case you are not familiar with vim: press i to start editing and, once you are finished, press Esc, type :wq and press Enter to write and quit. We want the following changes in that file:

vault_ssh_user: debian

[...]

vault_ssh_args: '-o StrictHostKeyChecking=yes'

vault_psql_pw: 'rollastrongpasswordplease'

vault_psql_pw_barman: 'rollastrongpasswordplease'

vault_mail_pw: 'putactualemaildummypasswordhere'

vault_tryton_pw: 'rollastrongpasswordplease'

[...]

vault_rstc_pw: 'rollastrongpasswordplease'

Here vault_ssh_user is the username you set previously for ssh_username, and vault_mail_pw is the actual password of the dummy email account mentioned in the prerequisites at the top. Enabling host key checking makes sure you would notice if an attacker tried to impersonate your server in the future. All the other values are passwords that will be set later on, so roll them with your password manager.
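If no password manager is at hand on the controller, random values can also be rolled from the kernel's random source. The following is a minimal sketch and not part of the repository. The helper name roll_pw is hypothetical, and restricting the output to letters and digits is an assumption made to avoid quoting issues in YAML and in connection strings.

```shell
#!/bin/sh
# Print a random alphanumeric password of the given length (default 32).
# Hypothetical helper: reads 1024 random bytes, keeps only letters and
# digits, and cuts the result to the requested length (intended for
# lengths up to about 64).
roll_pw() {
    head -c 1024 /dev/urandom | LC_ALL=C tr -dc 'A-Za-z0-9' | cut -c "1-${1:-32}"
}

# One fresh value per password variable from the vault file above.
for name in vault_psql_pw vault_psql_pw_barman vault_tryton_pw vault_rstc_pw; do
    printf "%s: '%s'\n" "$name" "$(roll_pw)"
done
```

The printed lines can be pasted into the editor opened by ansible-vault edit. Avoid redirecting them into an unencrypted file.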

Now we will modify plenty of values in the vars.yml configuration file for GNU Health:

[...]

gh_tryton_admin_mail: 'target@example.com'

[...]

psql_hba_rules:

- "local all postgres peer"

- "local health gnuhealth peer"

- "local template0,template1 gnuhealth peer"

- "hostssl all barman 10.5.242.111/32 scram-sha-256"

- "hostssl replication streaming_barman 10.5.242.111/32 scram-sha-256"

psql_set_encoding: true

psql_encoding: 'UTF-8'

psql_set_locale: true

psql_locale: 'en_US'

[...]

nginx_rproxy_domain: server.incus

[...]

backup: true

[...]

locale: true

<set en_US twice in lcl_* values>

[...]

ssmtp: true

ssmtp_mail_hub: "smtp.web.de:465"

ssmtp_mail_address: 'source@example.com'

[...]

logwatch: true

lw_mailto: 'target@example.com'

[...]

systemd_alert: true

sa_admin_mail: 'target@example.com'

[...]

timezone: true

tz_timezone: 'Europe/Berlin'

[...]

nftables: true

nft_network_interface: '{eth0}'

nft_internal_subnet: '10.5.242.0/24'

nft_allow_input_lines:

- "iif {{ nft_network_interface }} tcp dport 22 ip saddr { 10.5.242.110-10.5.242.112 } accept"

- "iif {{ nft_network_interface }} tcp dport 5432 ip saddr { 10.5.242.110/32,10.5.242.111/32 } accept"

- "iif {{ nft_network_interface }} tcp dport 443 ip saddr {{ nft_internal_subnet }} {{ 'accept' if 'nginx' in group_names else 'drop' }}"

If needed, you can also set nft_allow_input_lines host-wise by creating a directory host_vars next to group_vars. In that case it should contain a YAML file or folder with a name matching the server's host name (see https://docs.ansible.com/ansible/latest/inventory_guide/intro_inventory.html#organizing-host-and-group-variables).

If you want to look deeper into the configuration options, you can also find all the roles' variables documented in the roles chapter of this documentation.
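A host-wise override could be created as sketched below. The helper name write_host_nft_vars is hypothetical, and the rule inside the file is only an illustration, not a recommendation of this documentation. Note that a list defined in host_vars replaces the list from group_vars entirely, so every rule the host needs has to be repeated there.

```shell
#!/bin/sh
# Write a host-specific nftables override for one machine (hypothetical helper).
# $1: inventory directory, $2: host name as used in the hosts file
write_host_nft_vars() {
    inv=$1
    host=$2
    mkdir -p "$inv/host_vars"
    # Quoted heredoc: the Jinja2 braces are written literally, not expanded by the shell.
    cat > "$inv/host_vars/$host.yml" <<'EOF'
# Illustrative rule only: allow SSH from the server and the controller.
nft_allow_input_lines:
  - "iif {{ nft_network_interface }} tcp dport 22 ip saddr { 10.5.242.110, 10.5.242.112 } accept"
EOF
}

# Try it in a scratch directory before touching inventories/prod.
scratch=$(mktemp -d)
write_host_nft_vars "$scratch" backup.incus
cat "$scratch/host_vars/backup.incus.yml"
```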

4.2.1.4. Run the playbook

Now we are prepared to run the playbook in order to configure the main server and the backup system:

$ ansible-playbook playbooks/gnuhealth.yml -i inventories/prod -K --ask-vault-pass

Ansible aims to be idempotent, which means that you always end up in the same state even if you run a playbook multiple times, and that nothing keeps changing back and forth. Run the same command again and check that every single task is green / ok and not a single one is yellow / changed (and of course not failed either).
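Instead of scanning the colours by eye, the PLAY RECAP lines at the end of the second run can be checked mechanically. The following is a minimal sketch. The helper name recap_is_idempotent and the log file name are hypothetical. It relies on the usual recap format with changed=, unreachable= and failed= counters per host.

```shell
#!/bin/sh
# Read ansible-playbook output on stdin and succeed only if every recap line
# reports changed=0, unreachable=0 and failed=0. Hypothetical helper.
recap_is_idempotent() {
    awk '
        /changed=[0-9]+/ {
            seen = 1
            line = $0 " "                       # pad so a counter at line end still matches
            if (line !~ /changed=0[^0-9]/)     bad = 1
            if (line !~ /unreachable=0[^0-9]/) bad = 1
            if (line !~ /failed=0[^0-9]/)      bad = 1
        }
        END {
            if (seen && !bad) exit 0
            exit 1
        }
    '
}

# Usage on the controller (second run of the playbook):
#   ansible-playbook playbooks/gnuhealth.yml -i inventories/prod -K --ask-vault-pass | tee second-run.log
#   recap_is_idempotent < second-run.log && echo "idempotent"
```

The check fails when no recap line is found at all, so an aborted run is not mistaken for a clean one.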

4.2.1.5. Prepare desktop inventory

Similarly to the gnuhealth group, we now prepare the configuration for the client before we run the desktop.yml playbook. Open the configuration file:

$ vim inventories/prod/group_vars/desktop/vault.yml

These values are only used for connecting, not for setting new passwords:

vault_ssh_user: debian

vault_ssh_args: '-o StrictHostKeyChecking=yes'

We will also set some values in the unencrypted configuration file:

ghcl_profile_name: Tutorial

ghcl_trust_certs: true

timezone: true

tz_timezone: 'Europe/Berlin'

nftables: true

nft_network_interface: '{eth0}'

nft_internal_subnet: '10.5.242.0/24'

nft_allow_input_lines:

- "iif {{ nft_network_interface }} tcp dport 22 ip saddr 10.5.242.112/32 accept"

The ghcl_profile_name will be displayed later on when using the client. It is necessary to trust the certificate that signed the server's certificate, but this is handled by the default configuration after setting ghcl_trust_certs to true. We also set the timezone and the firewall rules again.

4.2.1.6. Run desktop playbook

Finally, run this playbook twice as well, and the system is set up:

$ ansible-playbook playbooks/desktop.yml -i inventories/prod -K

4.2.1.7. Check the new system

One obvious thing to test is whether you can connect to the server from the client: log in to the client, run the GNU Health client, click connect and enter the password that was set in gnuhealth/vault.yml as vault_tryton_pw. Follow the wizard to create a user and an institution. This is also useful for a restore test later on.

Then log in to the server and check email alerts, locale and timezone:

$ sudo systemctl restart nginx postgresql gnuhealth

$ sudo logwatch

$ sudo su postgres -c "psql -l"

$ timedatectl

Now log in to the client and verify that the firewall configuration is working:

$ sudo apt install -y netcat-traditional

$ nc -vzw 2 server.incus 5432 < /dev/null

$ nc -vzw 2 backup.incus 5432 < /dev/null

$ nc -vzw 2 server.incus 22 < /dev/null

$ nc -vzw 2 backup.incus 22 < /dev/null

These ports should all be closed for the client and for any other system that is neither one of the servers nor the controller.

Apart from SSH we can also check whether the GNU Health client is protected: open the client and enter wrong credentials until you get an error stating ‘Too many requests’. By default, 5 failed login attempts should lead to a ban of 5 minutes. This can be configured in your trytond.conf. You might also want to check that only the target machine gets banned and not further systems in the same network.

Finally, let’s perform a restore. Log in to the server, stop the services and delete all data:

$ sudo systemctl stop gnuhealth postgresql

$ sudo find /opt/gnuhealth/postgresql/15/main/ /opt/gnuhealth/his/data/attach/ -mindepth 1 -delete

Obviously you would not run this command on your production system. This is just to test the restore functionality ahead of time. If you have to perform a restore on the production system, make sure to test it on another one first and move the data to another path instead of deleting it. Afterwards, perform the restore from the controller:

$ ansible-playbook playbooks/gnuhealth.yml -i inventories/prod/ -e brm_restore=1 -e rstc_client_restore=1 -e postgresql="" -e application="" -e nginx="" -K --ask-vault-pass

Then wait for PostgreSQL to come back and restart GNU Health once it is up again:

$ sudo journalctl -fu postgresql

$ sudo systemctl restart gnuhealth

After that, use the client to verify that you can connect again and still have your data. Note that we only restored the data on your existing system, and you still have to set up a strategy for recreating the whole server with the existing data.
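For a restore on a production system, the advice to move the data aside rather than deleting it could be sketched as follows. The helper name move_contents_aside and the .aside- suffix are hypothetical. The helper empties a directory by moving its content into a timestamped sibling directory, so the original directory keeps its owner and permissions. On the server it would be run with sufficient privileges on the two data paths used in the find command above.

```shell
#!/bin/sh
# Move everything inside directory $1 into a timestamped sibling directory
# and print the path of that directory. Hypothetical helper.
move_contents_aside() {
    src=${1%/}
    dest="$src.aside-$(date +%Y%m%d-%H%M%S)"
    mkdir "$dest" || return 1
    find "$src" -mindepth 1 -maxdepth 1 -exec mv {} "$dest"/ \;
    # find does not report a failing mv, so verify that the source is empty now.
    [ -z "$(ls -A "$src")" ] || return 1
    echo "$dest"
}

# Demonstration in a scratch directory instead of the real data paths.
scratch=$(mktemp -d)
mkdir -p "$scratch/main/base"
touch "$scratch/main/PG_VERSION"
move_contents_aside "$scratch/main"
```

Because the sibling directory is on the same file system, the move is a rename and does not copy the data. Remove the directory only after the restored system has been verified.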

4.2.1.8. Testing with Incus

Before applying the above instructions you might want to try them in a testing setup. You could use Incus for this. The following steps require Incus to be installed. While the server could also be deployed in production this way, it would not make sense to have a client or backup system on the same host. Besides, network segmentation is not addressed either.

The inventory that is built up step by step in this example can also be created by running a Bash script from inside the repository's top-level directory:

$ bash inventories/test/generate_gnuhealth_prod_small.sh

Make sure to replace the inventory path inventories/prod with inventories/test/gnuhealth_prod_small throughout testing.

First create the instances. We can use containers instead of VMs except for the client:

$ incus launch images:debian/bookworm server -d root,size=20GiB -c limits.cpu=1 -c limits.memory=1GiB -d eth0,ipv4.address=10.5.242.110

$ incus launch images:debian/bookworm backup -d root,size=20GiB -c limits.cpu=1 -c limits.memory=1GiB -d eth0,ipv4.address=10.5.242.111

$ incus launch images:debian/bookworm controller -d root,size=20GiB -c limits.cpu=1 -c limits.memory=1GiB -d eth0,ipv4.address=10.5.242.112

$ incus launch images:debian/bookworm client -d root,size=20GiB -c limits.cpu=1 -c limits.memory=1GiB -d eth0,ipv4.address=10.5.242.113 --vm
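Since the four commands differ only in the instance name, the address and the --vm flag, they can also be generated. The following is a minimal sketch. The helper name launch_cmd is hypothetical. It only prints the commands shown above so that they can be reviewed before running them.

```shell
#!/bin/sh
# Print (not run) the launch command for one instance.
# $1: instance name, $2: IPv4 address, $3: optional extra flag such as --vm
launch_cmd() {
    name=$1
    ip=$2
    extra=${3:-}
    printf 'incus launch images:debian/bookworm %s -d root,size=20GiB -c limits.cpu=1 -c limits.memory=1GiB -d eth0,ipv4.address=%s%s\n' \
        "$name" "$ip" "${extra:+ $extra}"
}

launch_cmd server     10.5.242.110
launch_cmd backup     10.5.242.111
launch_cmd controller 10.5.242.112
launch_cmd client     10.5.242.113 --vm
```

Piping the output into sh would execute the commands once they look right.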

Next prepare every single instance such that it has a user, SSH and Python:

$ incus shell <instance>

# adduser debian

-> set password, the rest is optional and you can leave it empty

# usermod -aG sudo debian

# apt update && apt install -y openssh-server python3

# exit

Now install a GUI for the client:

$ incus shell client

# apt install -y xorg mate-desktop-environment

# exit

Verify it’s working:

$ incus console client --type vga

-> username: debian, password: you just set it

$ startx

-> close session if GUI is working

Log in to controller:

$ incus shell controller

# su debian

$ ...

From now on you can follow the instructions from above.