4.2.3. Community Server

This recipe sets up a community server that runs not only GNU Health HIS but also all related services from this project.

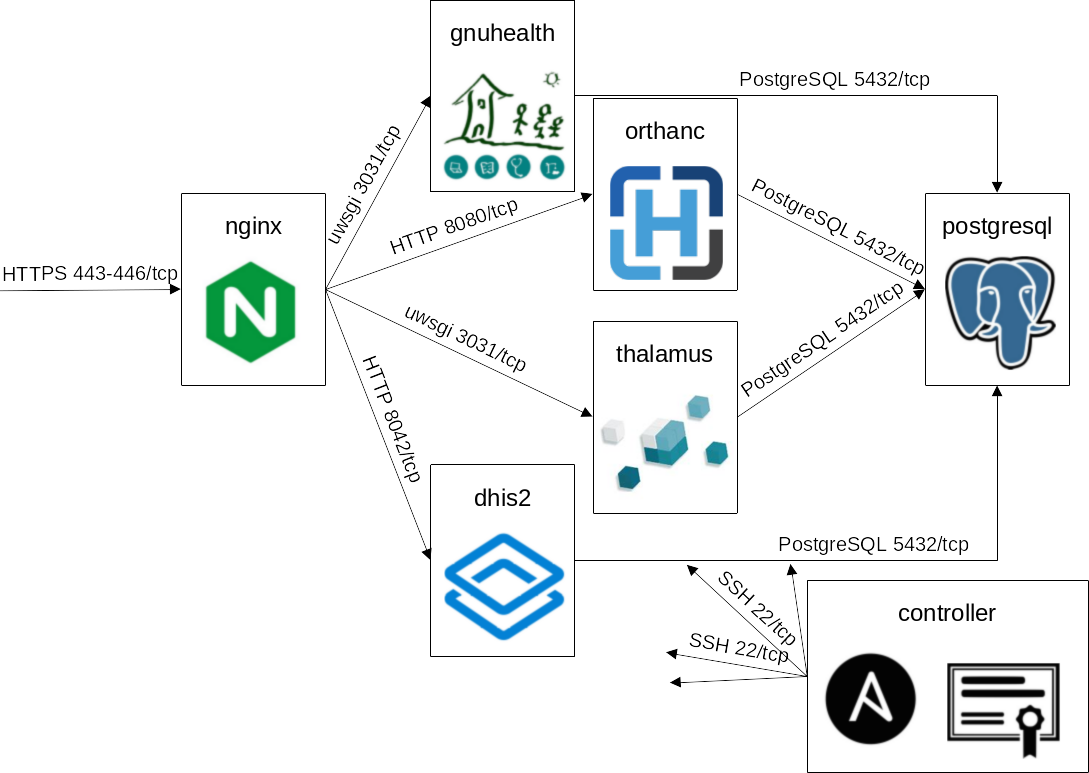

It contains a shared web server and database server but distinct application servers:

Community server based on different Incus containers. The directed arrows represent connection requests that still expect responses.

The controller in the bottom right connects to all instances using SSH and holds the Certificate Authority (CA) if necessary.

The Incus base can be created with a shell script (this requires Incus to be installed and set up, see the previous example under Examples -> Production -> Extended):

$ wget https://codeberg.org/gnuhealth/ansible/raw/branch/main/inventories/test/generate_community_server_incus.sh

$ cat generate_community_server_incus.sh

$ bash generate_community_server_incus.sh

Afterwards, open a shell on the controller and set a password for the debian user:

$ incus shell controller

# passwd debian

-> generate, set and store this password

# su debian

$ cd

Next, install the requirements from the first production example.

Then continue inside /opt/ansible/gnuhealth-ansible on the controller by handling the passwords:

$ for instance in "postgresql" "gnuhealth" "orthanc" "thalamus" "dhis2" "nginx"; do (cat ~/${instance}.pw >> inventories/test/community_server/host_vars/${instance}.incus.yml) && sudo rm ~/${instance}.pw; done

$ echo "postgresql_pw: $(tr -dc A-Za-z0-9 </dev/urandom | head -c 20; echo)" >> inventories/test/community_server/group_vars/all/vault.yml

$ read -s -p "Vault password:" VAULT_PW

$ echo "$VAULT_PW" > passwordfile

$ for yml in inventories/test/community_server/host_vars/*.yml; do ansible-vault encrypt "$yml" --vault-password-file passwordfile; done

$ ansible-vault encrypt inventories/test/community_server/group_vars/all/vault.yml --vault-password-file passwordfile

$ rm passwordfile

The passwords in ~/${instance}.pw were created by the shell script generate_community_server_incus.sh.
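
Before appending the password files to the host variables, it can help to confirm that the script produced one for every instance. The following is a minimal sketch, assuming the files live in the home directory as described above; `missing_pw_files` is a hypothetical helper name and not part of the project.

```shell
# Print the name of every instance whose password file is missing or empty.
# $1: directory holding the <instance>.pw files; remaining arguments: instance names.
# missing_pw_files is a hypothetical helper, not part of the GNU Health tooling.
missing_pw_files() {
    local dir="$1"; shift
    local instance
    for instance in "$@"; do
        # -s is true only if the file exists and is not empty
        [ -s "${dir}/${instance}.pw" ] || printf '%s\n' "$instance"
    done
}

# Possible usage on the controller:
#   missing_pw_files ~ postgresql gnuhealth orthanc thalamus dhis2 nginx
```

Empty output means every file is present. Any printed name points at an instance whose password would otherwise be silently missing from its host_vars file.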

An additional password for PostgreSQL is generated directly by the echo command above.
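
The inline password generation can also be wrapped in a small function, which makes the length explicit and easy to reuse. This is only a sketch under the assumption that bash and /dev/urandom are available; `gen_password` is a hypothetical name.

```shell
# Print a random alphanumeric password of the requested length (default 20).
# gen_password is a hypothetical helper; it mirrors the tr/urandom approach above.
gen_password() {
    local len="${1:-20}" pw=""
    # Read random bytes in chunks and keep only A-Z, a-z and 0-9
    # until enough characters have been collected.
    while [ "${#pw}" -lt "$len" ]; do
        pw="${pw}$(head -c 256 /dev/urandom | LC_ALL=C tr -dc 'A-Za-z0-9')"
    done
    printf '%s\n' "${pw:0:$len}"
}

# Possible usage:
#   echo "postgresql_pw: $(gen_password 20)" >> inventories/test/community_server/group_vars/all/vault.yml
```

Reading a bounded chunk per iteration avoids leaving `tr` attached to an endless stream, which matters if the shell runs with `pipefail` enabled.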

The Vault password prompt asks for a password that you generate and store somewhere else. Ansible Vault uses it to encrypt all the other passwords.
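
Because the Vault password briefly exists as a plain file on disk, one option is to keep that file in a temporary location with owner-only permissions and remove it automatically. The sketch below assumes `mktemp` is available; `write_secret_file` is a hypothetical helper name.

```shell
# Write a secret to a fresh temporary file and print the file's path.
# mktemp creates the file with owner-only permissions (mode 0600).
# write_secret_file is a hypothetical helper, not part of the project.
write_secret_file() {
    local secret="$1" path
    path="$(mktemp)" || return 1
    printf '%s\n' "$secret" > "$path"
    printf '%s\n' "$path"
}

# Possible usage with the Vault password read earlier:
#   pwfile="$(write_secret_file "$VAULT_PW")"
#   trap 'rm -f "$pwfile"' EXIT   # remove the file even if a later step fails
#   ansible-vault encrypt inventories/test/community_server/group_vars/all/vault.yml --vault-password-file "$pwfile"
```

The `trap` in the usage comment is the main design point: the password file is deleted when the shell exits, whether or not the encryption steps succeed.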

Now start by testing connectivity:

$ ansible -m command -a "apt update" -i inventories/test/community_server all --become --ask-vault-pass

As the services should be accessible from outside, we create proxies on the Incus host (port 80 is needed for Let’s Encrypt; the configuration here implies an HTTP redirect):

$ for port in {80,443,444,445,446}; do incus config device add nginx port${port} proxy listen=tcp:0.0.0.0:${port} connect=tcp:127.0.0.1:${port}; done
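
To review the proxy devices before creating them, the same loop can be written as a dry run that only prints the commands. This is a sketch; `proxy_commands` is a hypothetical name, and the printed `incus config device add` invocation is copied from the loop above.

```shell
# Print one "incus config device add" command per port without running it.
# $1: instance name; remaining arguments: port numbers.
# proxy_commands is a hypothetical helper for reviewing the commands first.
proxy_commands() {
    local instance="$1"; shift
    local port
    for port in "$@"; do
        printf 'incus config device add %s port%s proxy listen=tcp:0.0.0.0:%s connect=tcp:127.0.0.1:%s\n' \
            "$instance" "$port" "$port" "$port"
    done
}

# Show the five commands used in this recipe:
proxy_commands nginx 80 443 444 445 446
```

Once the output looks right, it could be piped into `bash` on the Incus host to apply it.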

And finally we run the playbook from the controller:

$ ansible-playbook playbooks/community_server.yml -i inventories/test/community_server/ -e ansible_user=debian --ask-vault-pass

If everything went fine and we can connect to the services, we create snapshots (from the Incus host):

$ for instance in {dhis2,gnuhealth,orthanc,postgresql,thalamus,nginx}; do incus snapshot create $instance prepared; done

Finally, create a shell script, executed as a cron job, that resets the instances to their snapshots:

cat > reset_community_server_vms.sh <<'EOF'

#!/bin/bash

set -e

date

incus project switch comserv

incus exec nginx -- /bin/bash -c "apt update && apt upgrade -y"

incus stop nginx

for instance in {dhis2,gnuhealth,orthanc,postgresql,thalamus}; do

incus snapshot restore $instance prepared

incus exec $instance -- /bin/bash -c "apt update && apt upgrade -y"

incus snapshot create $instance prepared --reuse

done

incus start nginx

EOF

crontab -e

-> add a line to the bottom "0 2 * * * bash /home/debian/reset_community_server_vms.sh >> /home/debian/reset_community_server_vms.log"
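
The cron line above appends only standard output to the log. If error messages should end up in the same file, the entry can redirect standard error as well. A minimal sketch follows; `cron_entry` is a hypothetical helper and the schedule is the one used above.

```shell
# Print a crontab line that runs a script daily at 02:00 and appends
# both stdout and stderr to a log file.
# cron_entry is a hypothetical helper, not part of the project.
cron_entry() {
    local script="$1" log="$2"
    printf '0 2 * * * bash %s >> %s 2>&1\n' "$script" "$log"
}

# Possible non-interactive installation instead of "crontab -e":
#   ( crontab -l 2>/dev/null; cron_entry /home/debian/reset_community_server_vms.sh /home/debian/reset_community_server_vms.log ) | crontab -
```

The non-interactive variant keeps existing crontab entries and appends the new one, which is convenient when the setup is scripted.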

Warning

This was tested in an Incus network where IPv6 is disabled. Otherwise, PostgreSQL-related tasks might fail because the HBA rules are currently based on IPv4 addresses.